Can LLMs accelerate software requirements engineering?

New research shows AI tends to outperform humans in software requirements

There's a lot of AI-bashing going on right now (and I know I've stoked the fire a bit 😅). But I still see a lot of positives in using it, especially in areas ancillary to the actual act of coding. One such area is software requirements.

Studies show poor requirements are the leading cause of software project failures (InfoTech, 2024). Other reports find that setting these guidelines up front is correlated with success. But, ironically, this is an area often minimized in project roadmaps.

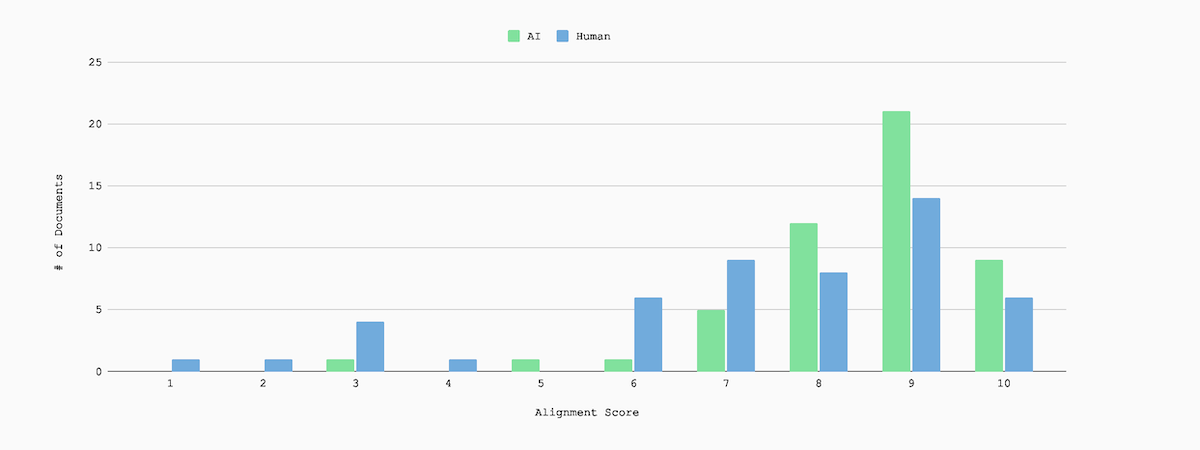

According to new research from Crowdbotics, LLMs outperform humans at requirements generation on average, shrinking a process that takes 2-4 weeks down to seconds. They tend to enhance completeness by 10.2% and lead to slightly better alignment at a fraction of the cost.

Cory Hymel, VP of Research and Innovation at Crowdbotics, sees big implications for AI in requirements engineering. "It'll become bifurcated like everything else: either you'll use AI, or you'll fall behind."

Of course, keeping a human in the loop matters here, since it's hard for an LLM to capture everything, like environmental factors, company culture, and access to internal data. "Having humans in the mixture helps bring creativity into the mix," he says.

Just thought I'd give a shoutout to some explorations on the sidelines of AI-generated code, the topic that seems to take up most of the literature on using LLMs in software engineering.

I'll be curious to see if engineering leaders agree. Are you seeing the benefits of embedding LLMs into the requirements engineering phase? Or does it still leave too many gaps in practice?